Last month, REDD re-emerged in the headlines. A group of 11 authors led by Edward Mitchard of Space Intelligence called for the retraction of research published earlier this year by West et al. in the peer-reviewed journal Science and picked up by the Guardian. Importantly, this new analysis is still undergoing peer review, and until that review is complete we wouldn't consider its findings ready for prime time.

We suggest waiting for a final (peer-reviewed) text before assessing the impact of this new research, but as the public discussion has already begun, we offer some early thoughts. The unpublished study raises three major concerns:

- The authors claim the West et al. study used inappropriate data to determine deforestation rates, and that those data were then used to pair project areas with their synthetic controls. They assert that, as a result, avoided deforestation may have been underestimated by 17-90%.

- They state that the West study's synthetic controls were not well chosen and that better validation procedures would have revealed the model's failures and allowed a correction.

- They believe the study contained calculation errors that led it to underestimate the number of "real" credits the REDD projects produced. They suggest the real number was 10% of the projects' original claims, whereas the study put it at 6%.

Dealing with the last point first: whether 6% or 10% of the credits issued are real is immaterial. The point of the West et al. study is that a large fraction (over 90%) of the credits issued by the 24 projects would not have been issued if the baselines had been set according to the rates observed in their synthetic controls. The authors of the unpublished study suggest the approach in the West study is flawed and leads to poor baselines that misrepresent the effectiveness of REDD projects. The new study does not, however, opine on the appropriateness of the projects' original baselines, nor do its authors propose an alternative set of baselines. We cannot tell whether they think the 24 projects analyzed in the study have reasonable baselines or not.
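To make the arithmetic behind "immaterial" explicit, here is a tiny Python sketch. The function name and labels are ours, for illustration only; the two shares are the estimates quoted above.

```python
def non_additional_share(real_share: float) -> float:
    """Fraction of issued credits NOT matched by avoided deforestation."""
    return 1.0 - real_share


# The two estimates of the share of "real" credits discussed above.
for label, real in [("West et al.", 0.06), ("Mitchard et al. (unpublished)", 0.10)]:
    print(f"{label}: {non_additional_share(real):.0%} of credits not backed")
```

This prints 94% and 90% respectively: under either estimate, roughly nine in ten credits are not backed by avoided deforestation.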

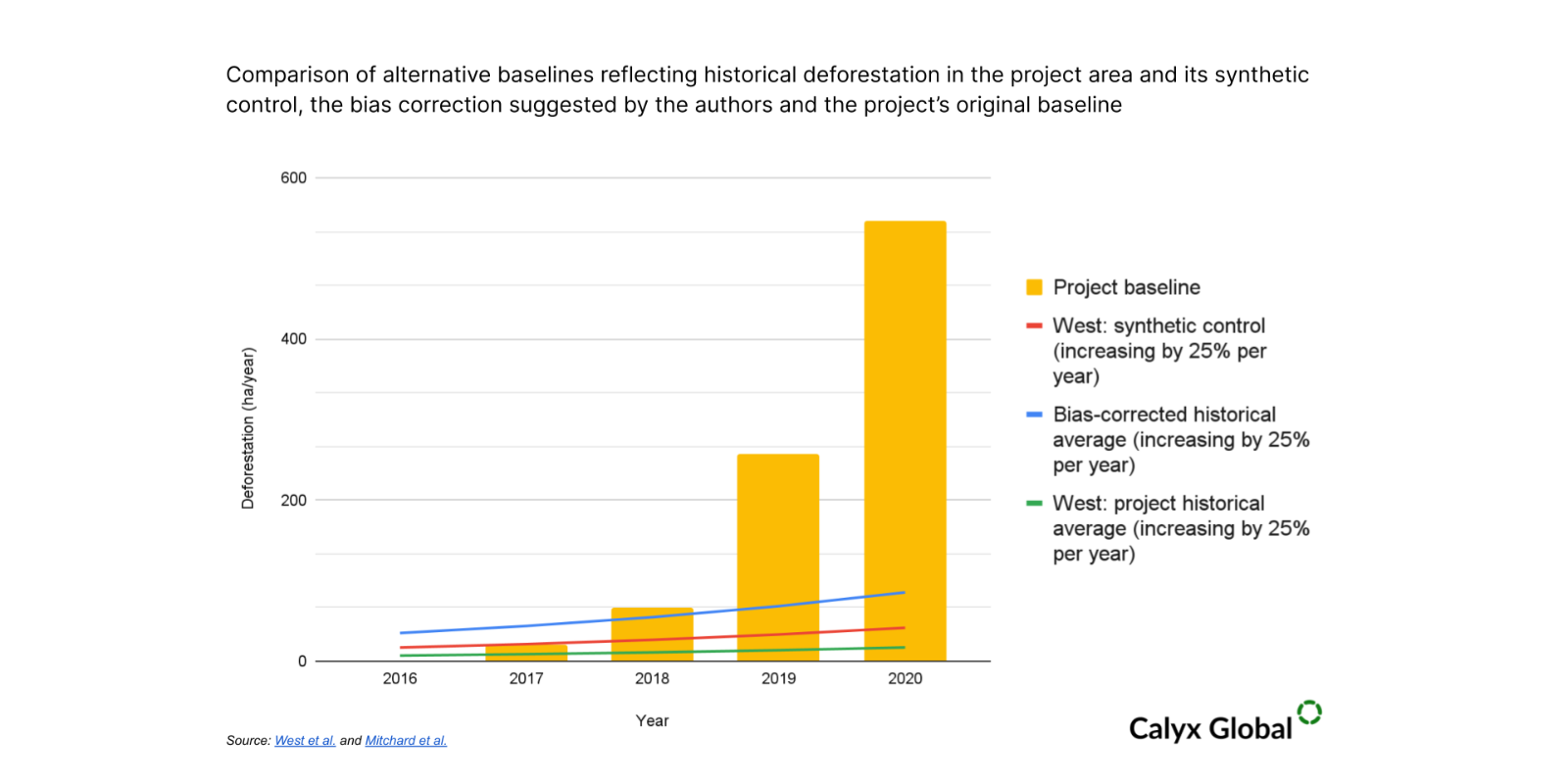

Our analysis of almost 70 REDD projects, using approaches very different from those of West et al. but all based on historical data on deforestation near the project, suggests that many of the baselines are substantially overestimated. For example, the authors cite VCS 1900, a project in Tanzania, as demonstrating the failure of the West model that generates the synthetic control (SC). They show that deforestation in the SC (about 18 ha per year in 2016) diverges by over 100% from deforestation in the project area (about 7 ha per year), and suggest that this level of difference disqualifies the SC. Meanwhile, the project has chosen a baseline of 21 ha in 2017, 67 ha in 2018, 257 ha in 2019 and 547 ha in 2020 (see figure; these numbers cover wooded areas only, and the project also has a much higher baseline that includes the loss of shrubbier areas).
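A quick check of the divergence figure, using the approximate values quoted above. The helper name is hypothetical; it simply expresses the gap between two rates as a percentage of the project-area rate.

```python
def percent_divergence(control_rate: float, project_rate: float) -> float:
    """Difference between control and project-area rates, as % of the project rate."""
    return (control_rate - project_rate) / project_rate * 100


sc_rate = 18.0       # ha/yr, synthetic control, ~2016 (approximate, from the text)
project_rate = 7.0   # ha/yr, project area (approximate, from the text)
print(f"SC exceeds the project area by {percent_divergence(sc_rate, project_rate):.0f}%")
```

This prints about 157%. By the same arithmetic, the project's own 2020 baseline of 547 ha exceeds the 7 ha/yr historical rate by about 7,700%.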

The authors say deforestation can be underestimated by a factor of five, so the historic rate of 7 ha per year could represent 35 ha per year. If they are correct, the project's baseline diverges from that adjusted historic rate starting in 2018, growing from roughly twice as high (67 vs 35 ha) to roughly 15 times as high (547 vs 35 ha). Deforestation pressure is increasing, no doubt. But is it increasing by a factor of 15 over five years? The authors' concern about the synthetic control approach is valid and points toward ways of improving the method. But focusing on problems with that approach misses the larger worry: the way baselines are currently produced results in substantial overcrediting.
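The ratios above can be reproduced in a few lines of Python. The baseline values and the factor of five come from the text; the variable names are ours.

```python
# VCS 1900 project baseline, wooded areas only (ha/yr), as quoted in the text.
project_baseline = {2017: 21, 2018: 67, 2019: 257, 2020: 547}

historic_rate = 7            # ha/yr, project-area rate (West et al.)
underestimation_factor = 5   # the unpublished study's factor-of-five scenario
adjusted_rate = historic_rate * underestimation_factor  # 35 ha/yr

# How many times larger the claimed baseline is than the adjusted rate.
ratios = {year: ha / adjusted_rate for year, ha in project_baseline.items()}
for year, ratio in ratios.items():
    print(f"{year}: {ratio:.1f}x")
```

This prints 0.6x, 1.9x, 7.3x and 15.6x for 2017 through 2020: even granting the factor-of-five adjustment, the claimed baseline outgrows the adjusted historic rate within two years.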

Let's visualize the difference between the baselines mentioned above. In the figure below, the green line begins at the average historical deforestation rate in the VCS 1900 project area according to West et al. The red line starts at the rate observed in West et al.'s synthetic control for this project. The blue line reflects the authors' contention that deforestation is underestimated by a factor of five. The authors take no position on how the starting points of the green, red and blue lines compare with the yellow bars, which show the baseline deforestation rates claimed by the project. Since 2001, deforestation in the project area has increased year-on-year by at most 100% (from 2 to 4 ha/yr). In the six years before the project began, the deforestation rate increased by at most 20%, and it did not increase at all in the four years leading up to the project's crediting period (see Mitchard et al., figure 2). The lines show each of the three historical rates increasing by 25% per year, a faster rate of increase than has been observed. The project baseline (yellow), by contrast, increases by 100-300% every year.
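The comparison in the figure can be sketched numerically. This assumes each line starts in 2017, the first baseline year, and runs for the four years of quoted baseline data; the figure's actual start year may differ. The 25% growth rate and the starting values are from the text; `compound` is a hypothetical helper.

```python
# Claimed VCS 1900 baseline (wooded areas), 2017-2020, ha/yr, from the text.
baseline = [21, 67, 257, 547]

# Year-on-year percentage increase in the claimed baseline.
yoy = [(b / a - 1) * 100 for a, b in zip(baseline, baseline[1:])]
print("baseline year-on-year increase (%):", [round(x) for x in yoy])

# Starting rates (ha/yr) of the three lines; 25%/yr growth as described above.
start_rates = {"green (West et al., project area)": 7.0,
               "red (synthetic control)": 18.0,
               "blue (5x underestimation)": 35.0}
GROWTH = 0.25


def compound(start: float, years: int, rate: float = GROWTH) -> list:
    """Rate in each year when it grows by `rate` per year from `start`."""
    return [start * (1 + rate) ** t for t in range(years)]


for name, start in start_rates.items():
    line = compound(start, len(baseline))
    print(f"{name}: {[round(v, 1) for v in line]} vs baseline {baseline}")
```

The year-on-year increases print as [219, 284, 113], all inside the 100-300% range, while even the blue line only reaches about 68 ha/yr in the fourth year against a claimed 547.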

The notion that West et al.'s approach underestimates avoided deforestation by 17-90% turns the debate on its head. How do we calculate avoided deforestation? We take the difference between what would have happened in the absence of the project (the baseline) and what actually happened once project activities were implemented (observed deforestation). We cannot measure avoided deforestation; we can only infer it, because the counterfactual baseline is estimated, not measured. The only way to underestimate avoided deforestation is either to overestimate observed deforestation (subtracting too much) or to underestimate the baseline (allowing too little deforestation in the baseline scenario). West et al.'s approach may result in low baselines, but to imply that avoided deforestation is therefore underestimated is false.
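The same reasoning in symbols, with notation that is ours for illustration: $B_t$ is the counterfactual baseline deforestation in year $t$, $O_t$ the deforestation that actually occurred, and hats mark estimates.

```latex
\begin{aligned}
\mathrm{AD}_t &= B_t - O_t, \qquad \widehat{\mathrm{AD}}_t = \hat{B}_t - \hat{O}_t \\
\widehat{\mathrm{AD}}_t < \mathrm{AD}_t &\iff (\hat{B}_t - B_t) < (\hat{O}_t - O_t)
\end{aligned}
```

An underestimate therefore requires $\hat{B}_t < B_t$ or $\hat{O}_t > O_t$ (or both). Because $B_t$ is never observed, the baseline error on the left can only be argued about, not measured.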

Some criticisms of the synthetic controls seem valid: controlling for biome type, land use, protected status and drivers of deforestation should make it easier to find a reasonable match. However, the beauty of the synthetic control approach is that if its deforestation rate and pattern match those of the project area, by whatever twist of market and ecological interconnections, then the synthetic control can be used to predict what will happen in the project area. This approach, like all baseline approaches, works only to the extent that the past is predictive of the future. (This is why Verra has shortened the baseline validity period, the time before a baseline must be reassessed, from 10 years to 6 in the new consolidated REDD methodology: the past predicts the future only up to a point.) West et al. used a methodical, rule-based approach to check that the rate and trajectory of deforestation were well matched, and rejected cases where they were not. Mitchard et al. suggest they should have run more tests and tweaked their model, but it should not be overlooked that the reviewers at Science thought they did a reasonable job.
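To make the idea concrete, here is a minimal, generic synthetic-control sketch in Python. It is not West et al.'s procedure: their covariates, donor pool, matching rules and validation tests are not reproduced, and the donor series are invented for illustration. The helper names (`fit_weights`, `weighted_series`) are hypothetical, and Frank-Wolfe with exact line search is simply one convenient way to find non-negative weights that sum to one and best match the project area's pre-project deforestation. The same weights applied to the donors' later years then serve as the counterfactual.

```python
def weighted_series(weights, donors):
    """Weighted sum of donor series, year by year."""
    return [sum(w * s[t] for w, s in zip(weights, donors))
            for t in range(len(donors[0]))]


def fit_weights(donors_pre, target_pre, iters=2000):
    """Non-negative donor weights summing to 1 that minimise the squared
    pre-period gap to the target (Frank-Wolfe with exact line search)."""
    n = len(donors_pre)
    w = [1.0 / n] * n  # start from equal weights
    for _ in range(iters):
        fit = weighted_series(w, donors_pre)
        resid = [f - y for f, y in zip(fit, target_pre)]
        # Gradient of the sum of squared residuals with respect to each weight.
        grad = [2 * sum(r * x for r, x in zip(resid, s)) for s in donors_pre]
        i = min(range(n), key=lambda j: grad[j])  # most promising donor
        # Move toward "all weight on donor i"; d is that move in series space.
        d = [x - f for x, f in zip(donors_pre[i], fit)]
        dd = sum(x * x for x in d)
        if dd == 0:
            break
        step = max(0.0, min(1.0, -sum(r * x for r, x in zip(resid, d)) / dd))
        if step == 0:
            break  # no improving step left: the current weights are optimal
        w = [(1 - step) * wj for wj in w]
        w[i] += step
    return w


# Hypothetical annual deforestation (ha/yr) for three donor areas:
# 4 pre-project years followed by 2 years after the start. Illustrative only.
donors = [[4, 5, 6, 7, 8, 9],
          [10, 9, 8, 7, 6, 5],
          [20, 20, 20, 20, 20, 20]]
project_pre = [7, 7, 7, 7]  # observed project-area rates before the project
pre = len(project_pre)

weights = fit_weights([s[:pre] for s in donors], project_pre)
synthetic = weighted_series(weights, donors)
rmse_pre = (sum((a - b) ** 2 for a, b in zip(synthetic[:pre], project_pre)) / pre) ** 0.5
print("weights:", [round(x, 2) for x in weights])
print("pre-period RMSE (ha/yr):", round(rmse_pre, 3))
print("counterfactual after start (ha/yr):", [round(x, 1) for x in synthetic[pre:]])
```

With these invented inputs the optimum is weights of 0.5, 0.5 and 0, since the project series is exactly the average of the first two donors. The weighted donor rates after the start year form the synthetic-control baseline, which, as the text stresses, is only as good as the assumption that the pre-project match carries forward.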

Despite the roller coaster of opinions on REDD, the issues debated in this recent submission are actually fairly subtle and arguably miss the point. Anyone who has studied REDD, including us, will tell you that most projects overestimate their impact. The real argument is over how much. And the challenge for all of us is to be conservative in estimating a counterfactual baseline about which we will never have 100% certainty.
